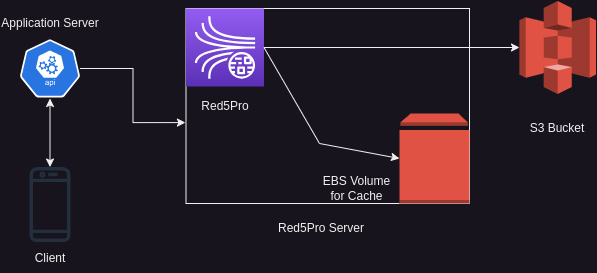

For video streaming, we use Red5 Pro, hosted on an AWS EC2 instance. For the second time, the instance ran out of storage. Since the issue kept recurring, we investigated the cause and worked out a permanent solution.

First, let me explain the application architecture, and then I will explain how we resolved the issue.

We have a feature to save the streamed video, and we use an S3 bucket for this purpose.

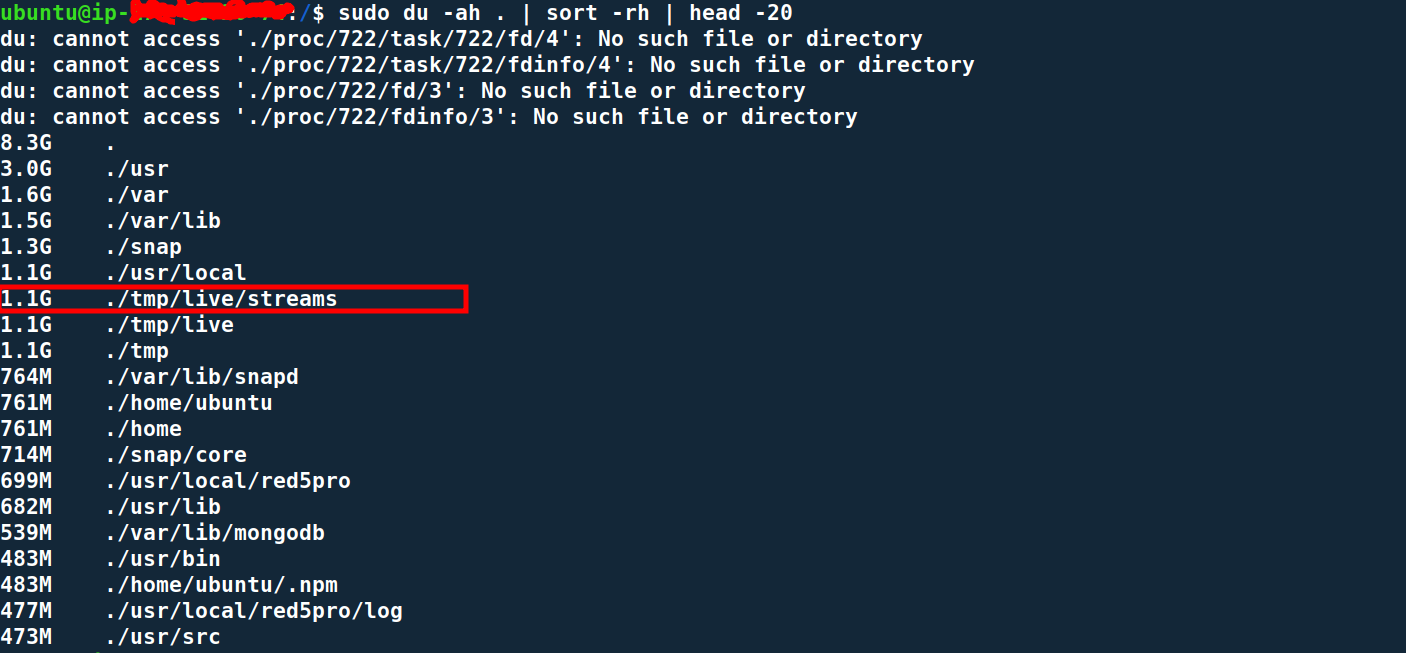

We found out that even though we store the files in the S3 bucket, Red5 Pro also uses the /tmp/live/streams directory to store these files. This is what causes the server to run out of storage.

This article is split into two sections. In the first section, we track down the issue. In the second, we discuss how we resolved it.

Find the folder/files/application for our storage shortage

First, clean the cached packages from apt (Advanced Package Tool),

<code>sudo apt-get clean</code>

Now remove the packages that were installed as dependencies of other packages but are no longer required,

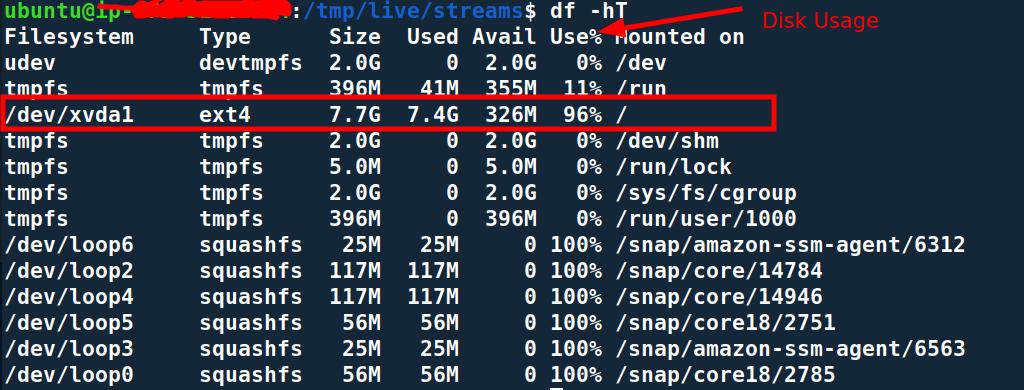

sudo apt-get autoremove

Now it is time to investigate what is going on with our file storage. Let's check our disk space,

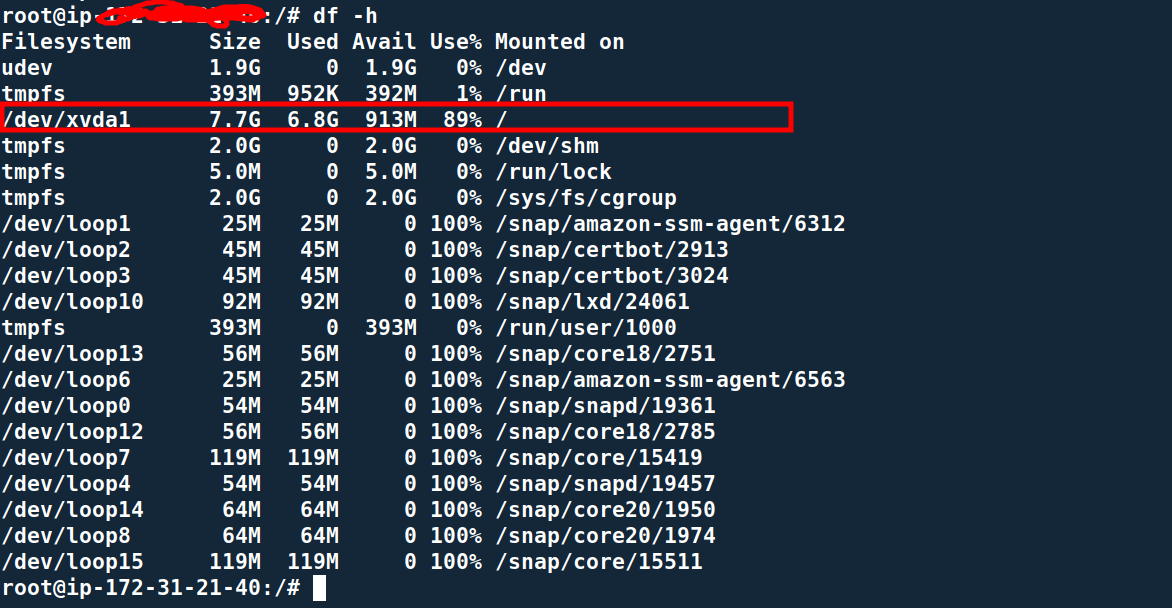

df -h

With this command, we can check the disk usage, especially the column named Use%.

From here, you should be able to confirm whether you are actually running out of storage.
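When there are many mounts, scanning the Use% column by eye gets tedious. The following is a minimal sketch that filters `df -P` output down to the filesystems at or above a threshold. The function name `over_threshold` and the 80% limit are illustrative choices, not something from our setup, and the sketch assumes mount points without spaces.

```shell
# Print "mount-point use%" for every filesystem at or above a threshold.
# Reads `df -P` style output on stdin; the threshold defaults to 80.
# `over_threshold` is a hypothetical helper name used for illustration.
# Usage: df -P | over_threshold 80
over_threshold() {
  awk -v limit="${1:-80}" '
    NR > 1 {                 # skip the header line
      use = $5               # 5th column of `df -P` is the capacity, e.g. 89%
      sub(/%/, "", use)      # drop the percent sign so it compares as a number
      if (use + 0 >= limit) print $6, $5
    }'
}
```

Piping `df -P` into the function keeps the parsing separate from the live system, so the same filter can be tried against saved output first.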

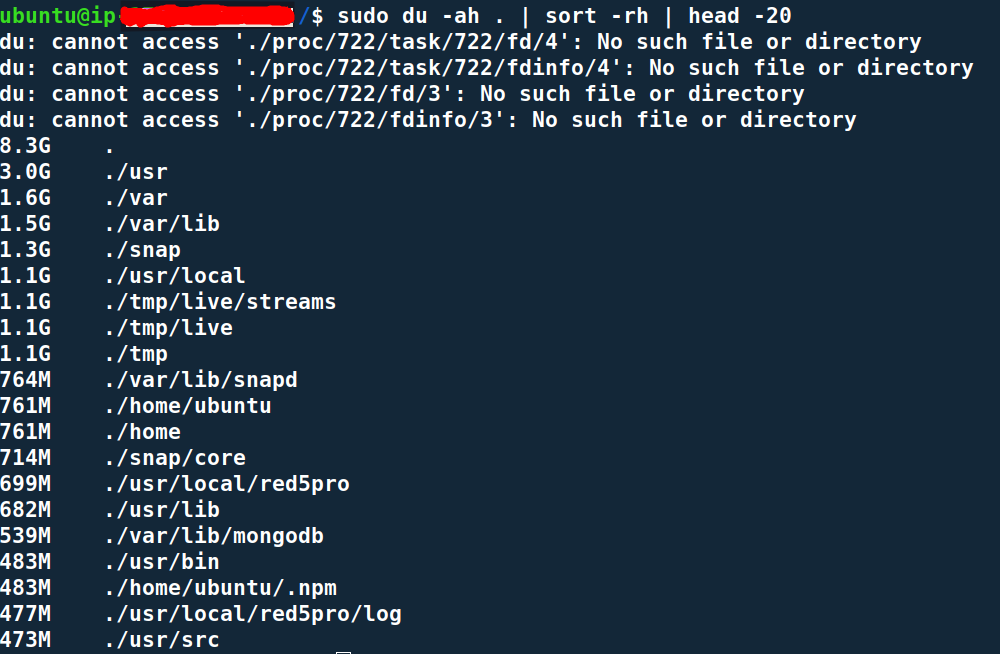

Now we need to dig deeper to find out which application is responsible for this unfortunate situation. We will take the top 20 files/directories of the file system by size (depending on your situation, you might need more than 20).

First go to the root directory,

cd /

Become root/super-user,

sudo su

Now run the following command,

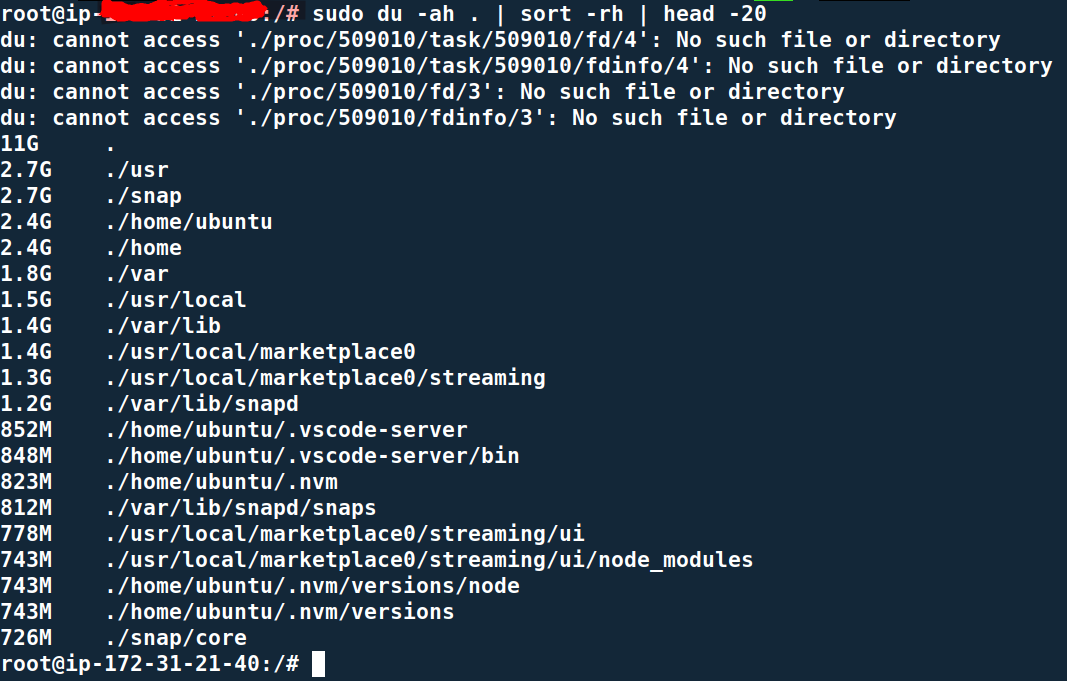

sudo du -ah . | sort -rh | head -20

This will list the 20 largest files/directories.

Now look closely and figure out which directories/files are taking up an unusual amount of storage.

This is how you can find the root cause of the storage shortage.
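Running du from / also walks into other mounted filesystems and prints permission or vanished-file errors along the way. As a hedged variation on the command above, the sketch below wraps it in a function that stays on one filesystem (`-x`) and discards error output. The name `largest_entries` is made up for this example.

```shell
# List the N largest files/directories under a directory.
# `largest_entries` is a hypothetical helper name used for illustration.
# Usage: largest_entries <dir> [count]    e.g. sudo-run: largest_entries / 20
largest_entries() {
  dir="${1:-/}"
  count="${2:-20}"
  # -x: stay on one filesystem (skips other mounts such as /proc)
  # -a: include files as well as directories, -h: human-readable sizes
  # sort -rh: sort human-readable sizes, largest first
  du -xah "$dir" 2>/dev/null | sort -rh | head -n "$count"
}
```

The first line of the output is normally the directory itself, since its total includes everything beneath it.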

As another example, we had a VS Code server running on the server, and it took up almost 1 GB of storage. During the investigation, we first checked the disk usage,

df -h

It shows that /dev/xvda is using 89% of its total storage, so we need to clean up the partition. To find out which directory or application is taking up all this storage, we can simply run the following from the root (/) directory,

sudo du -ah . | sort -rh | head -20

It shows that the cloud VS Code server is using ~1 GB of storage. In our case, VS Code was installed on the server temporarily and is no longer required. We can now delete the VS Code server directory and reclaim the space.

Possible Solutions for storage issue

Here, we can see that our Red5 Pro server is persisting files inside the /tmp/live/streams directory. The fastest and easiest solution is to delete the contents of the directory.
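A one-off cleanup like this could be sketched as follows. It removes everything inside a directory but keeps the directory itself, so the server can still write to it. The helper name `empty_dir` is hypothetical, and the sketch assumes no recording is being written at the moment it runs.

```shell
# Remove everything inside a directory while keeping the directory itself.
# `empty_dir` is a hypothetical helper name used for illustration.
# Usage: empty_dir /tmp/live/streams   (run as root if the files are root-owned)
empty_dir() {
  dir="${1:?usage: empty_dir <directory>}"   # refuse to run without an argument
  [ -d "$dir" ] || { echo "not a directory: $dir" >&2; return 1; }
  # -mindepth 1 excludes the directory itself; -delete removes contents depth-first
  find "$dir" -mindepth 1 -delete
}
```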

But this is a temporary solution: in the future, the server will store video files there again, and we will run into the storage shortage repeatedly. Instead, we could use one of the following longer-term solutions,

- Using a tool called tmpreaper. This removes files that have not been accessed for a certain number of days.

- Using a CRON job to delete these files ourselves at a specific interval

Since the usage of tmpreaper is self-explanatory, we will discuss how we can make use of a CRON job.

To add a CRON job, CRON must first be installed. In Ubuntu, it is installed by default. If CRON is not available on your system, install it first, and then make sure the CRON service is up and running with,

systemctl status cron

In Ubuntu, system-wide CRON jobs are written in /etc/crontab, so one option is to update that file with the relevant file deletion task. In my case, I have to delete the files from /tmp/live/streams/ when they are more than 2 days old, and this operation requires super-user/root level permission.

In this particular case, instead of editing /etc/crontab, we will use sudo crontab -e, which edits the root user's crontab.

As the task in the cron job, I will use the find command to delete files inside /tmp/live/streams/.
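Before scheduling any deletion, it can help to preview what a find expression matches. The sketch below uses the same `-type f -mtime` test but only prints the matches. The helper name `stale_files` is hypothetical, and the 2-day default mirrors the age used in this article.

```shell
# Print regular files under a directory whose modification time is older
# than the given number of days, without deleting anything.
# `stale_files` is a hypothetical helper name used for illustration.
# Usage: stale_files /tmp/live/streams 2
stale_files() {
  dir="${1:?usage: stale_files <directory> [days]}"
  days="${2:-2}"
  # -mtime +N counts whole 24-hour periods, ignoring the fractional part
  find "$dir" -type f -mtime +"$days" -print
}
```

Because find drops the fractional part of a file's age, `-mtime +2` matches files last modified at least three full days ago, so the preview may list fewer files than a strict "older than 48 hours" reading suggests.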

We have to add the following line, which runs every day at 02:00, finds all the files older than 2 days, and deletes them.

0 2 * * * /usr/bin/find /tmp/live/streams/ -type f -mtime +2 -exec rm -rf {} \;

First open the crontab as root/super-user,

sudo crontab -e

Now add the line,

0 2 * * * /usr/bin/find /tmp/live/streams/ -type f -mtime +2 -exec rm -rf {} \;

Save the file. The CRON service detects the change on its own, so we do not have to restart the service.

So from now on, our CRON service will repeatedly delete the unnecessary files from the /tmp/live/streams directory on the schedule we set.
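If the same entry has to be added on several servers, editing the crontab by hand each time becomes error-prone. As a hedged alternative to the interactive editor, the sketch below merges a line into an existing crontab listing only when it is not already present. The function name `merge_cron_line` is made up for this example, and the usage line assumes sudo does not prompt for a password.

```shell
# Append a cron entry to a crontab listing only if it is not already there.
# Reads the current crontab on stdin and writes the merged result to stdout.
# `merge_cron_line` is a hypothetical helper name used for illustration.
merge_cron_line() {
  line="${1:?usage: merge_cron_line '<cron entry>'}"
  existing=$(cat)
  # Re-emit the existing entries unchanged
  if [ -n "$existing" ]; then
    printf '%s\n' "$existing"
  fi
  # -F: fixed string, -x: match the whole line, -q: no output
  if ! printf '%s\n' "$existing" | grep -Fxq -- "$line"; then
    printf '%s\n' "$line"
  fi
}

# Usage against root's crontab (assumes passwordless sudo):
# sudo crontab -l 2>/dev/null \
#   | merge_cron_line '0 2 * * * /usr/bin/find /tmp/live/streams/ -type f -mtime +2 -exec rm -rf {} \;' \
#   | sudo crontab -
```

Running it a second time leaves the crontab unchanged, and `sudo crontab -l` can be used afterwards to confirm the entry is in place.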

Check out blog posts from one of our developers on Linux Shell (bash) Programming Guidelines and 7 Linux Shell (bash) Programming Unusual Syntax that Can Bother.

References:

- https://manpages.ubuntu.com/manpages/lunar/en/man8/tmpreaper.8.html

- https://www.red5pro.com/docs/special/cloudstorage-plugin/file-cleanup/
